This is another post on pyVmomi, which helps automate vSphere operations using Python. In the previous post we discussed how to get started with pyVmomi. In this post I am sharing a script to create multiple linked clones.

Linked clones cannot be created from the vSphere UI (neither the C# client nor the Web Client); they can only be created through the API. The script described in this post creates multiple linked clones from an existing source VM.

The script is available at https://github.com/linked_clone.py

The script is invoked as below:

    root@virtual-wires:~# python linked_clone.py -u <VC Username> -p <VC_password> -v <VC IP> -d <ESX_IP> --datastore <Datastore_name> --num_vms <Number of linked clones to be created> --vm_name <Source VM name>
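To make the options above concrete, here is a minimal sketch of how such a command line could be parsed with argparse. This is not taken from the actual script: the function name `parse_args`, the `dest` names (other than `numvm`, which matches the `opts.numvm` used later in this post) and the help texts are assumptions for illustration.

```python
import argparse


def parse_args(argv=None):
    """Parse the linked clone options (hypothetical sketch, not the real script)."""
    parser = argparse.ArgumentParser(
        description="Create multiple linked clones from a source VM")
    # Connection details for vCenter
    parser.add_argument("-u", dest="user", required=True, help="VC username")
    parser.add_argument("-p", dest="password", required=True, help="VC password")
    parser.add_argument("-v", dest="vcenter", required=True, help="VC IP")
    # Destination ESX host and datastore for the clones
    parser.add_argument("-d", dest="dest_host", required=True, help="ESX IP")
    parser.add_argument("--datastore", required=True, help="Datastore name")
    # Number of clones and the VM to clone from
    parser.add_argument("--num_vms", dest="numvm", type=int, default=1,
                        help="Number of linked clones to be created")
    parser.add_argument("--vm_name", required=True, help="Source VM name")
    return parser.parse_args(argv)
```

Passing a list to `parse_args` makes it easy to try the parser without touching `sys.argv`; calling it with no argument reads the real command line.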

The script performs the following steps.

1. Validates the input parameters.

- Check for source VM

- Check for Destination datastore

- Check for Destination Host

- Check for support of snapshot on the source VM

2. Verifies the requirements for a linked clone

- Creates snapshot

- Checks that the source VM's datastore is accessible from the destination ESX host

- Creates the clone and relocate specs

3. Actual operations

- Takes a snapshot of the source VM

- Spawns the clone task

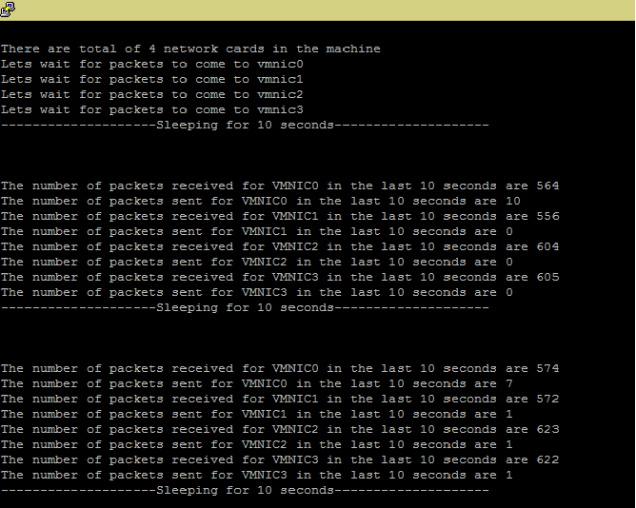

- Treats each clone as a thread and monitors the progress

One of the things I learned while writing this script was how to use the threading module in Python. It lets the script start multiple clone operations in parallel and track the task status of each thread.

    import threading

    # Start one thread per requested clone
    for vms in range(0, opts.numvm):
        t = threading.Thread(target=linkedvm, args=(child, vmfolder, vms, clone_spec))
        t.start()

In the above code, ‘target’ is the function which will be executed and ‘args’ are the parameters passed to that function. For each clone we create a thread object ‘t’ this way and then start the thread using t.start().
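To show the same pattern end to end without a vCenter connection, here is a small self-contained sketch. The worker `clone_worker`, the `run_clones` helper and the clone names are placeholders I made up for illustration; in the real script the worker would start the clone task and wait for it. The sketch also keeps the thread objects and joins them, which is one way to wait until every clone has reported its status.

```python
import threading


def clone_worker(index, results, lock):
    """Stand-in for the real clone function; records a status per clone."""
    name = "linked-clone-%d" % index
    # The real worker would start the clone task and wait for it here.
    status = "success"
    # Guard the shared dictionary so concurrent updates stay consistent.
    with lock:
        results[name] = status


def run_clones(num_vms):
    """Start one thread per clone and wait for all of them to finish."""
    results = {}
    lock = threading.Lock()
    threads = []
    for index in range(num_vms):
        t = threading.Thread(target=clone_worker, args=(index, results, lock))
        t.start()
        threads.append(t)
    # join() blocks until the corresponding thread has finished.
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    for name, status in sorted(run_clones(3).items()):
        print(name, status)
```

Collecting the results in a dictionary keyed by clone name makes it straightforward to report which clones succeeded once all threads are done.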

I hope this helps you deploy multiple linked clones using pyVmomi.